This article was originally published by the author on Medium. We are republishing it here.

Many people have this question in mind when they deploy a Kubernetes cluster. While I was delivering a training on k8s, one of the participants asked it, a bit early, while I was still explaining each component of a k8s cluster, i.e. api-server, controller, scheduler, etcd, kube-proxy, kube-dns, kubelet, etc.

I promised to answer him after explaining how pods are created in a k8s cluster and how each component works to deploy a pod on the scheduled node, and then I continued. At that moment it struck me that I should write this up for everyone who has the same question.


The k8s components mentioned above can run either as system services or as pods in the k8s cluster. When we deploy a cluster using “kubeadm init”, all of these components except kubelet run as pods, and only kubelet runs as a system service. So the question arises… WHY?

Okay, so to understand the reason we need to understand how a pod is deployed in a k8s cluster. This blog is not meant to cover that in detail (I am planning to write an entire series on “Kubernetes” and “Networking and container security using CNI in k8s cluster”, so stay tuned for that). For now, let’s discuss only the reason why kubelet runs as a system service.

I am assuming that you have some basic knowledge of k8s and how it works. Here is a quick recap of the events that take place when a pod is deployed:

- When you deploy a pod in a k8s cluster, the API server receives a REST request from a client. In most cases the client is kubectl, but you can also write your own client using the “k8s.io/client-go/kubernetes” package.

- The API server passes this request through authentication and authorization (plus some additional modules such as mutating admission controllers/webhooks, schema validation, validating admission controllers, etc.) and then stores the resource in etcd, the centralized datastore of Kubernetes.

- Once the resource (here, the Pod) is stored in etcd, the scheduler (another pod running in the cluster) gets a notification. How? All control plane components keep a watch on the API server, so they are notified of the events they subscribe to. The scheduler then schedules the pod on one of the suitable nodes in the cluster.

- Once the scheduler assigns a node to the pod, etcd holds the updated pod spec with the node assignment, and the API server sends an event to the kubelet running on that particular node.

- And now the answer to the question “why does kubelet run as a system service?” begins. Kubelet receives the pod details, creates a sandbox container, and attaches the volumes to it so that an application container can run. It also calls IPAM and CNI to provide networking to this sandbox container. Then it downloads the docker image mentioned in the pod spec and runs a container inside the pod. So, in simple terms, kubelet is the creator of pods on a specific node, and the creator of pods cannot itself be a pod. Let’s make it clearer in case you are still unsure about this.

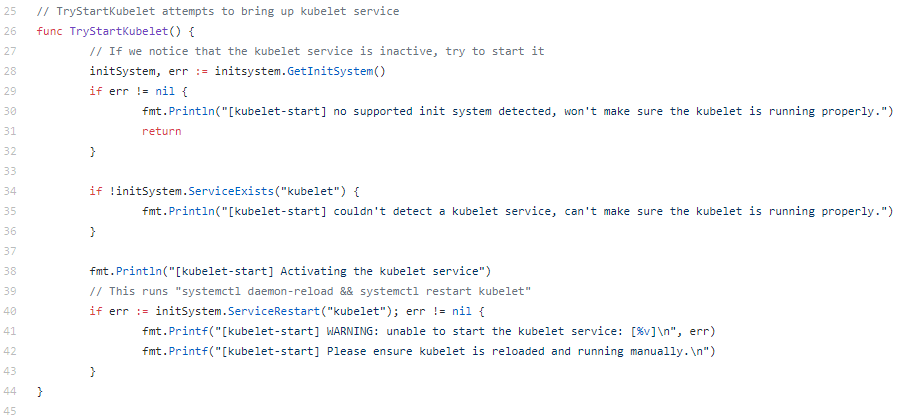
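To make the recap above a bit more concrete, here is a small toy model in Go. It is only a sketch under my own assumptions: the types `Pod`, `APIServer` and `Kubelet` and the functions `RunScheduler` and `DeployPod` are hypothetical names invented for this illustration, not the real Kubernetes types or APIs, and the "watch" is just a list of callbacks. What it tries to show is the order of events, and that the kubelet sits outside the pod store as the thing that turns a stored pod into a running one.

```go
package main

import "fmt"

// Pod is a toy stand-in for the real Pod resource (hypothetical, not the k8s type).
type Pod struct {
	Name     string
	NodeName string // empty until the scheduler picks a node
	Running  bool
}

// APIServer is a toy store standing in for the API server plus etcd.
// Watchers are plain callbacks that fire on every write.
type APIServer struct {
	pods     map[string]*Pod
	watchers []func(*Pod)
}

func NewAPIServer() *APIServer { return &APIServer{pods: map[string]*Pod{}} }

// Watch registers a callback that is called whenever a pod is stored or updated.
func (a *APIServer) Watch(fn func(*Pod)) { a.watchers = append(a.watchers, fn) }

// Save persists the pod and notifies every watcher.
func (a *APIServer) Save(p *Pod) {
	a.pods[p.Name] = p
	for _, w := range a.watchers {
		w(p)
	}
}

// RunScheduler watches for pods without a node and binds them to the first
// node in the list (a deliberately trivial placement rule).
func RunScheduler(api *APIServer, nodes []string, events *[]string) {
	api.Watch(func(p *Pod) {
		if p.NodeName != "" || len(nodes) == 0 {
			return
		}
		p.NodeName = nodes[0]
		*events = append(*events, "scheduler: bound "+p.Name+" to "+p.NodeName)
		api.Save(p)
	})
}

// Kubelet is a plain value that lives outside the pod store, mirroring the
// point that it runs on the host and is not a pod itself.
type Kubelet struct{ Node string }

// Run watches for pods assigned to this kubelet's node and "starts" them.
func (k Kubelet) Run(api *APIServer, events *[]string) {
	api.Watch(func(p *Pod) {
		if p.NodeName != k.Node || p.Running {
			return
		}
		*events = append(*events, "kubelet on "+k.Node+": sandbox, network, image, start "+p.Name)
		p.Running = true
		api.Save(p)
	})
}

// DeployPod wires the pieces together and returns the pod and the event log.
func DeployPod(name string, nodes []string) (*Pod, []string) {
	var events []string
	api := NewAPIServer()
	RunScheduler(api, nodes, &events)
	for _, n := range nodes {
		Kubelet{Node: n}.Run(api, &events)
	}
	pod := &Pod{Name: name}
	events = append(events, "apiserver: stored "+name)
	api.Save(pod)
	return pod, events
}

func main() {
	pod, events := DeployPod("web", []string{"node-1", "node-2"})
	for _, e := range events {
		fmt.Println(e)
	}
	fmt.Println("running:", pod.Running, "on", pod.NodeName)
}
```

If you call `DeployPod` with an empty node list, no kubelet is registered and the pod is stored but never started, which is the situation this article is about: without a kubelet on a node, nothing can create a pod there.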

Whenever you deploy a k8s cluster using “kubeadm init” on the master node, the very first thing kubeadm spawns is kubelet, as a systemd service, as the kubeadm code in the k8s repo shows.

In that code, kubeadm first calls GetInitSystem() (line 28 of the listing) to get the InitSystem for the current system. It then checks whether a kubelet service exists (line 34) with the method ServiceExists(“kubelet”), and finally calls ServiceRestart(“kubelet”) (line 40) to start kubelet as a systemd service.
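The kubeadm listing itself is not reproduced here, so the following is only a simplified, self-contained sketch of the flow just described, not the actual kubeadm source. The method names `ServiceExists` and `ServiceRestart` follow the ones mentioned above; the trimmed-down `InitSystem` interface, the in-memory `fakeInit`, and the functions `StartKubelet` and `Bootstrap` are my own assumptions for illustration. The detection step (`GetInitSystem()`) is left out, and the error handling is an assumption of this sketch as well.

```go
package main

import (
	"errors"
	"fmt"
)

// InitSystem is a hypothetical, trimmed-down interface for this sketch.
// Only the two methods named in the article are modelled.
type InitSystem interface {
	ServiceExists(name string) bool
	ServiceRestart(name string) error
}

// fakeInit is an in-memory stand-in so the example runs without systemd.
type fakeInit struct {
	installed map[string]bool
	running   map[string]bool
}

func newFakeInit(installed ...string) *fakeInit {
	f := &fakeInit{installed: map[string]bool{}, running: map[string]bool{}}
	for _, name := range installed {
		f.installed[name] = true
	}
	return f
}

func (f *fakeInit) ServiceExists(name string) bool { return f.installed[name] }

func (f *fakeInit) ServiceRestart(name string) error {
	if !f.installed[name] {
		return errors.New("service " + name + " not found")
	}
	f.running[name] = true
	return nil
}

// StartKubelet checks that a kubelet service exists and then (re)starts it.
func StartKubelet(sys InitSystem) error {
	if !sys.ServiceExists("kubelet") {
		return errors.New("kubelet service not found on this host")
	}
	return sys.ServiceRestart("kubelet")
}

// Bootstrap starts kubelet first and only then returns the control plane
// components that would be deployed as pods.
func Bootstrap(sys InitSystem) ([]string, error) {
	if err := StartKubelet(sys); err != nil {
		return nil, fmt.Errorf("no kubelet, so no pod can be created: %w", err)
	}
	// From here on there is a kubelet on the node that can create pods.
	return []string{"etcd", "kube-apiserver", "kube-controller-manager", "kube-scheduler"}, nil
}

func main() {
	pods, err := Bootstrap(newFakeInit("kubelet"))
	fmt.Println(pods, err)

	_, err = Bootstrap(newFakeInit()) // host without a kubelet service
	fmt.Println(err)
}
```

The ordering is the whole point: `Bootstrap` cannot return a single pod until `StartKubelet` has succeeded, because the kubelet is what creates pods on the node.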

Once kubelet is running, kubeadm deploys the other components (apiserver, etcd, scheduler, etc., along with add-ons such as kube-proxy) as pods.

In the same way, when you run “kubeadm join” on a worker node, it spawns kubelet on that worker node as well.

I hope you can now see why we cannot run kubelet as a pod or DaemonSet in a k8s cluster, and why it runs as a system service instead.

If you have more thoughts or observations on the details I shared above, please add them in the comment section.

If you are new to k8s or containers, read my other short article on the topic: https://link.medium.com/iblDswkzzZ