Virtualization has had a significant impact on businesses and has been a dominant technology for a decade. Its key benefit was the ability to centrally manage, maintain and present a consistent view of the developed product. On top of that, VMware's vSphere suite significantly reduced business costs through resource sharing, i.e. hardware virtualization. However, in a fast-paced IT industry where new technologies appear at short intervals, keeping a product equipped with all the latest stacks is nearly impossible. The focus is now shifting from hardware virtualization to OS-level virtualization, in other words “Containerization”. Containers run on top of an operating system and share the host kernel and other resources. This not only makes an application more lightweight but also reduces the need for a hypervisor, which can itself be resource-intensive.

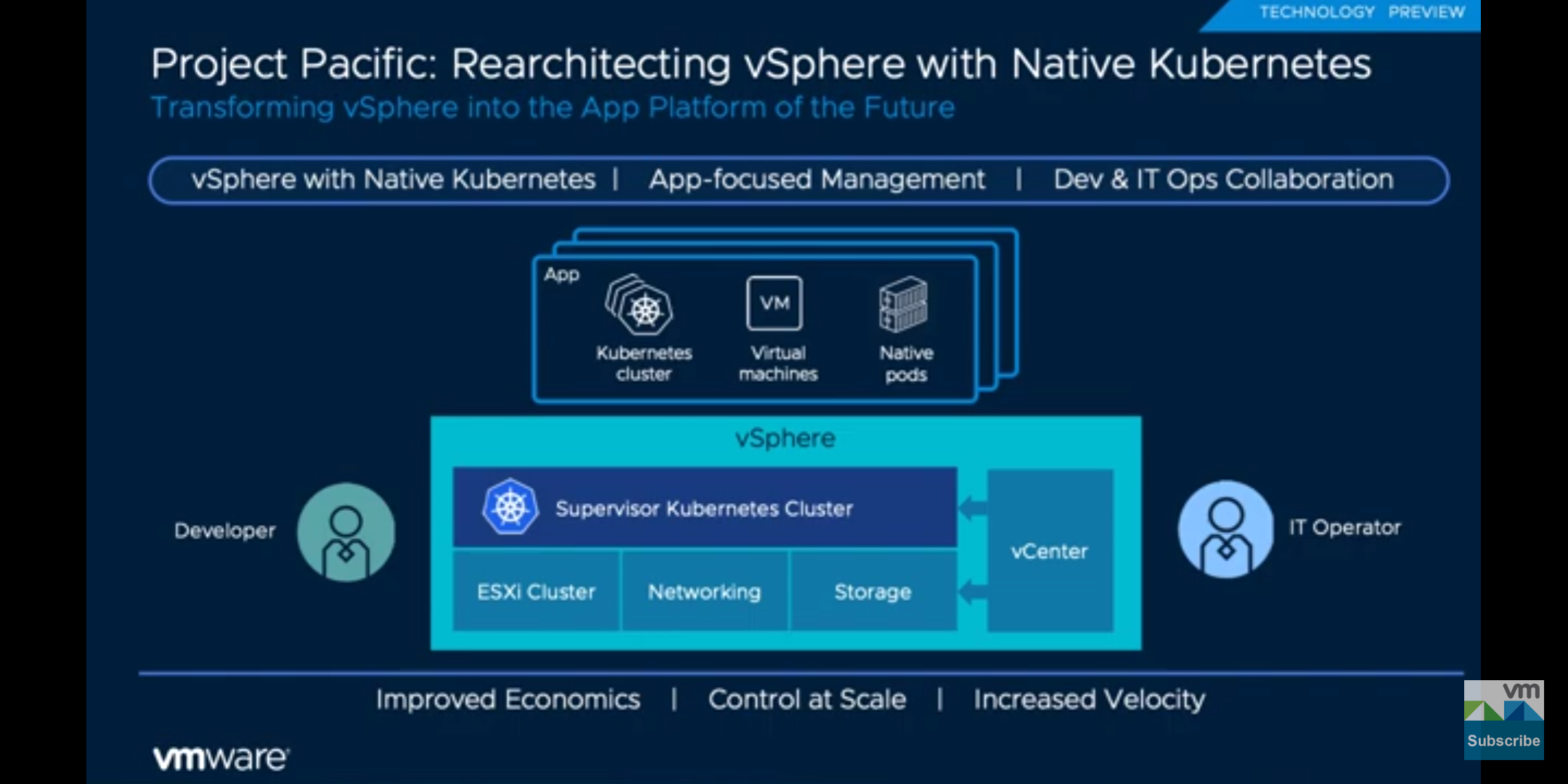

Project Pacific is built with the intent to integrate the power of Kubernetes, a container orchestration tool, within the existing vSphere stack. It does not simply add a Kubernetes component on top of vSphere; the integration goes deep enough that Kubernetes and vSphere can talk to each other. The project addresses modern applications that are hybrid in nature, i.e. that consist of a Kubernetes cluster, serverless functions and VMs, because not everything can be migrated to containers with ease. Project Pacific includes customized implementations of existing Kubernetes components, such as the kubelet (here called the Spherelet), along with many new CRDs (Custom Resource Definitions) and controllers.

Source: blogs.vmware.com

Demystifying the architecture:

Most of the components in the vSphere stack remain intact. This project is not about reinventing the wheel; the focus is on incorporating the flexibility and power of Kubernetes.

The newly added components give developers a unified way to interact with both k8s and the SDDC infrastructure through the Kubernetes API, while IT admins get a namespaced view of the vSphere infrastructure in vCenter. The components are as follows:

- Supervisor Cluster: VMware has completely and natively integrated Kubernetes into vSphere. This is called the Supervisor Cluster. This component includes the newly added VM operator capable of deploying a VM as a resource, which is a game-changer.

- CRX Runtime: Workloads deployed on the Supervisor, including Pods, each run in their own isolated VM on the hypervisor. To accomplish this we have added a new container runtime to ESXi called the CRX. The CRX is like a virtual machine that includes a Linux kernel and minimal container runtime inside the guest.

- Spherelet: The supervisor is a special kind of Kubernetes cluster that uses ESXi as its worker nodes instead of Linux. This is achieved by integrating a kubelet (VMware’s implementation is called the Spherelet) directly into ESXi. The Spherelet doesn’t run in a VM; it runs directly on ESXi.
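
To make the idea of "a VM as a resource" more concrete, here is a minimal Python sketch that builds a manifest-like dictionary for a virtual machine. The API group `vmoperator.vmware.com/v1alpha1` and the `imageName`, `className` and `powerState` fields are assumptions for illustration and may not match the actual VM operator schema. The helper name `vm_manifest` and the sample values are hypothetical.

```python
import json


def vm_manifest(name, namespace, image, vm_class):
    """Build a manifest-like dict describing a VM as a Kubernetes-style resource.

    The apiVersion, kind and spec field names are assumptions for
    illustration, not a confirmed VM operator schema.
    """
    return {
        "apiVersion": "vmoperator.vmware.com/v1alpha1",  # assumed API group
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "imageName": image,        # assumed field name
            "className": vm_class,     # assumed field name
            "powerState": "poweredOn",  # assumed field name and value
        },
    }


# Hypothetical sample values, chosen only for the example.
manifest = vm_manifest("legacy-db", "team-a", "centos-7", "small")
print(json.dumps(manifest, indent=2))
```

The point of the sketch is only that a VM is described declaratively, in the same shape as a Pod or any other Kubernetes object, and handed to the cluster rather than created by hand.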

Kubernetes as a platform – Modern Application’s view.

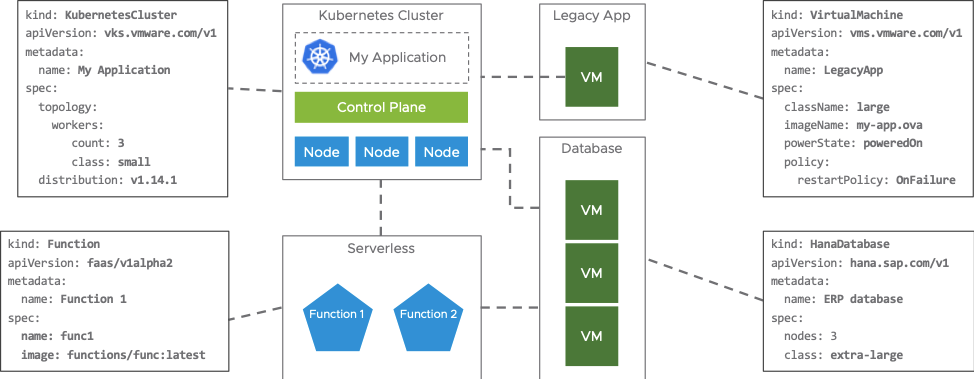

The newly re-architected vSphere lets developers supply just a specification file, or manifest, which then deploys the complex infrastructure using the various CRDs and controllers. Because Kubernetes is also responsible for maintaining the desired state of the resources, developers only need to focus on their application, while the newly added CRDs and controllers in Project Pacific take care of the rest.
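
The desired-state idea can be illustrated with a small Python sketch. It is not how Kubernetes or Project Pacific is implemented. It only shows the general pattern of comparing what a manifest asks for with what currently exists and deriving the actions needed to close the gap. The function name `reconcile` and the sample resources are hypothetical.

```python
def reconcile(desired, actual):
    """Return the actions needed to move `actual` toward `desired`.

    Both arguments map a resource name to its spec (any comparable value).
    """
    actions = []
    for name, spec in desired.items():
        if name not in actual:
            actions.append(("create", name))
        elif actual[name] != spec:
            actions.append(("update", name))
    for name in actual:
        if name not in desired:
            actions.append(("delete", name))
    return actions


# Desired state mixes a Pod and a VM, as in a hybrid application.
desired = {
    "web": {"kind": "Pod", "replicas": 3},
    "db": {"kind": "VirtualMachine", "cpus": 4},
}
actual = {
    "web": {"kind": "Pod", "replicas": 2},
    "cache": {"kind": "Pod", "replicas": 1},
}

plan = reconcile(desired, actual)
print(plan)  # [('update', 'web'), ('create', 'db'), ('delete', 'cache')]
```

A real controller runs this comparison continuously, which is why the developer only has to declare the target state once.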

Boon for IT admins and Developers:

Kubernetes is not just a container orchestrator; it can orchestrate almost anything, in this case VMs. Hence the term “Platform Platform”: it can be leveraged as a platform for other platforms. Modern applications are quite complex: legacy apps run in VMs, databases run as separate entities and, following the current trend, a container ecosystem runs alongside them. For an IT admin, managing security, QoS and compliance across these varying stacks can be complicated, while for developers, developing, testing and deploying apps can be a major overhead.

To address these concerns, the concept of a Namespace is introduced at the ESXi level, where admins can enforce policies, QoS and other monitoring aspects on a Namespace.

In short, the Namespace is the unit of governance. Developers, in turn, use the exposed Kubernetes API to interact with the infrastructure with the roles and permissions they were granted when admitted to the namespace.
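
As a rough illustration of the Namespace as a unit of governance, the Python sketch below models a namespace that carries an admin-defined CPU limit and a set of user roles, and admits a workload only when both checks pass. The class, its fields and the role names are hypothetical and do not represent the vSphere or Kubernetes API.

```python
from dataclasses import dataclass, field


@dataclass
class Namespace:
    name: str
    cpu_limit: int                               # total CPU cores the admin allows
    members: dict = field(default_factory=dict)  # user -> role (illustrative names)
    cpu_used: int = 0

    def admit(self, user, cpu_request):
        """Admit a workload only if the user may deploy and the quota allows it."""
        if self.members.get(user) not in ("edit", "admin"):
            return False, "user lacks permission in namespace"
        if self.cpu_used + cpu_request > self.cpu_limit:
            return False, "CPU quota exceeded"
        self.cpu_used += cpu_request
        return True, "admitted"


ns = Namespace("team-a", cpu_limit=8, members={"alice": "edit", "bob": "view"})
r1 = ns.admit("alice", 6)  # fits within the 8-core limit
r2 = ns.admit("alice", 4)  # 6 + 4 would exceed the limit
r3 = ns.admit("bob", 1)    # read-only role cannot deploy
print(r1, r2, r3)
```

The admin sets the policy once on the namespace, and every request from a developer is evaluated against it, regardless of whether the workload is a container or a VM.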

Conclusion:

Because this project builds on Kubernetes, which is open source and extensible, it has a lot of room to grow: custom CRDs and controllers can be written to integrate new tools and stacks into the vSphere infrastructure. For instance, a MongoDB CRD and controller could deploy a MongoDB instance from just the details specified in a manifest, and the controller could then keep watch on the desired state.
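
The MongoDB example can be sketched in Python as follows. This is a toy model under stated assumptions, not a working operator: the `example.com/v1` API group, the `MongoDB` kind, the spec fields and the `MongoDBController` class are all hypothetical, and the controller tracks instances in memory instead of talking to a cluster.

```python
def mongodb_resource(name, version, replicas):
    """Custom resource instance a developer might write in a manifest."""
    return {
        "apiVersion": "example.com/v1",  # hypothetical group/version
        "kind": "MongoDB",               # hypothetical kind
        "metadata": {"name": name},
        "spec": {"version": version, "replicas": replicas},
    }


class MongoDBController:
    """Toy controller that records running instances in memory."""

    def __init__(self):
        self.running = {}  # name -> last applied spec

    def reconcile(self, resource):
        """Bring the recorded state in line with the resource's spec."""
        name = resource["metadata"]["name"]
        spec = resource["spec"]
        current = self.running.get(name)
        if current == spec:
            return "in sync"
        self.running[name] = dict(spec)  # store a copy of the desired spec
        return "created" if current is None else "updated"


controller = MongoDBController()
cr = mongodb_resource("orders-db", "4.2", 3)

first = controller.reconcile(cr)   # nothing exists yet
second = controller.reconcile(cr)  # state already matches
cr["spec"]["replicas"] = 5         # developer edits the manifest
third = controller.reconcile(cr)   # controller notices the drift
print(first, second, third)
```

In a real deployment the controller would watch the Kubernetes API for changes to `MongoDB` objects and call `reconcile` on each event, creating or resizing the underlying Pods or VMs as needed.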