Kubernetes Introduction

Kubernetes (K8S) is an open-source system for automating deployment, scaling, and management of containerized applications.

It groups containers that make up an application into logical units for easy management and discovery.

Running a Kubernetes Cluster on a Public Cloud:

When deploying a Kubernetes cluster on a public cloud such as Azure, there are two options:

- Deploy K8S from scratch using ACS Engine on Azure.

- Use one of the turnkey solutions, such as the Flannel-based or Weave-based deployments.

There are many resources describing the Flannel-based and Weave-based turnkey solutions for deploying K8S on Azure; however, very few cover deploying K8S from scratch using ACS Engine on Azure.

Here we present an end-to-end technique for deploying K8S from scratch using ACS Engine on Azure.

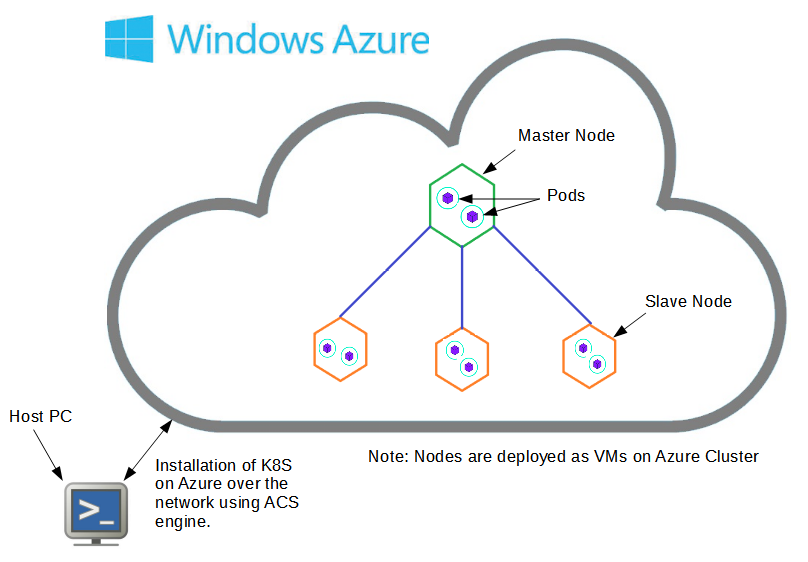

Fig: Installation of K8S on Azure over the network using ACS Engine.

Steps for Kubernetes Deployment on Azure

Prerequisites:

Install git, Python 2.7.9 or later, and Docker (latest version).

- Installation of Azure CLI on the Linux host VM

As they say, “a great cloud needs great tools”, so we need to install the Azure CLI on the Linux host VM using:

$ curl -L https://aka.ms/InstallAzureCli | bash
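Before moving on, it can help to confirm that the prerequisites are actually on the PATH. The following is a minimal Python 3 sketch, assuming the command names `git`, `docker` and `az` (the Azure CLI entry point installed by the script above); the function names are hypothetical helpers, not part of any Azure tooling, and the version check applies to the interpreter that runs the script.

```python
import shutil
import sys

# Command names assumed for the prerequisites; adjust for your distribution.
REQUIRED_TOOLS = ["git", "docker", "az"]
# Minimum Python version stated in the prerequisites (2.7.9).
MIN_PYTHON = (2, 7, 9)


def missing_tools(tools):
    """Return the tools from `tools` that cannot be found on the PATH."""
    return [tool for tool in tools if shutil.which(tool) is None]


def python_version_ok(version=None, minimum=MIN_PYTHON):
    """Compare a (major, minor, micro) version against the minimum."""
    if version is None:
        version = sys.version_info
    return tuple(version[:3]) >= minimum


if __name__ == "__main__":
    missing = missing_tools(REQUIRED_TOOLS)
    print("missing tools:", ", ".join(missing) if missing else "none")
    print("python version ok:", python_version_ok())
```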

- Installation of ACS Engine on the Linux host VM

Prerequisite:

Golang must be installed on the Linux host VM:

$ sudo apt-get install golang

Steps for installing ACS Engine on the Linux host VM:

- Set up the Go path:

$ mkdir $HOME/go

- Edit $HOME/.bash_profile and add the following lines to set up your Go path:

export PATH=$PATH:/usr/local/go/bin
export GOPATH=$HOME/go

Note: if ~/.bash_profile is missing, add the above lines to ~/.bashrc instead. Then reload the profile (or ~/.bashrc):

$ source $HOME/.bash_profile
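The profile edit above (prefer ~/.bash_profile, fall back to ~/.bashrc) is easy to get wrong when the steps are repeated. Below is a minimal Python 3 sketch of the same edit, written so that running it twice does not duplicate the lines; `add_go_path` and `choose_profile` are hypothetical helper names.

```python
from pathlib import Path

# The two lines from the step above, written verbatim into the profile.
GO_LINES = [
    "export PATH=$PATH:/usr/local/go/bin",
    "export GOPATH=$HOME/go",
]


def choose_profile(home: Path) -> Path:
    """Use ~/.bash_profile if it exists, otherwise fall back to ~/.bashrc."""
    bash_profile = home / ".bash_profile"
    return bash_profile if bash_profile.exists() else home / ".bashrc"


def add_go_path(home: Path) -> Path:
    """Create $HOME/go and append any missing Go lines to the profile."""
    (home / "go").mkdir(exist_ok=True)  # same effect as `mkdir $HOME/go`
    profile = choose_profile(home)
    text = profile.read_text() if profile.exists() else ""
    missing = [line for line in GO_LINES if line not in text.splitlines()]
    if missing:
        # Start on a fresh line if the file does not end with a newline.
        prefix = "" if text == "" or text.endswith("\n") else "\n"
        with profile.open("a") as f:
            f.write(prefix + "\n".join(missing) + "\n")
    return profile
```

For example, `add_go_path(Path.home())` returns the file it edited; `source` that file afterwards so the current shell picks up GOPATH.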

- Perform the following preliminary steps to build the acs-engine binary.

- Get a local copy of the acs-engine GitHub project:

$ go get github.com/Azure/acs-engine

- Change to the project directory:

$ cd $GOPATH/src/github.com/Azure/acs-engine

- Install glide and fetch the project dependencies:

$ sudo add-apt-repository ppa:masterminds/glide
$ sudo apt-get update
$ sudo apt-get install glide
$ glide create
$ glide install
$ sudo make

- Get the go-bindata binary:

$ go get -u github.com/jteeuwen/go-bindata/...
$ cd $GOPATH/bin

Verify that go-bindata is available in this location. Then build the acs-engine binary from the project directory:

$ cd $GOPATH/src/github.com/Azure/acs-engine
$ sudo make

Verify that the acs-engine binary has been created:

$ ls $GOPATH/src/github.com/Azure/acs-engine/bin
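Both verification steps can also be scripted. Here is a minimal Python 3 sketch, assuming the standard GOPATH layout used throughout this post and falling back to $HOME/go when GOPATH is unset (the Go default since Go 1.8); the helper names are hypothetical.

```python
import os
from pathlib import Path


def gopath() -> Path:
    """Return $GOPATH, or $HOME/go if it is not set."""
    return Path(os.environ.get("GOPATH", str(Path.home() / "go")))


def acs_engine_dir(gp: Path) -> Path:
    """Location of the acs-engine checkout created by `go get`."""
    return gp / "src" / "github.com" / "Azure" / "acs-engine"


def check_build(gp: Path) -> dict:
    """Report whether go-bindata and the acs-engine binary are present."""
    return {
        "go-bindata": (gp / "bin" / "go-bindata").is_file(),
        "acs-engine": (acs_engine_dir(gp) / "bin" / "acs-engine").is_file(),
    }


if __name__ == "__main__":
    for name, present in check_build(gopath()).items():
        print(f"{name}: {'found' if present else 'MISSING'}")
```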


- Run the acs-engine binary to display its command-line parameters:

$ cd $GOPATH/src/github.com/Azure/acs-engine/bin
$ ./acs-engine

- Generating the Service Principal

Kubernetes uses a Service Principal to talk to Azure APIs to dynamically manage resources such as User Defined Routes and L4 Load Balancers. Using the Azure CLI:

$ az login
$ az account set --subscription="${SUBSCRIPTION_ID}"
$ az ad sp create-for-rbac --role="Contributor" --scopes="/subscriptions/${SUBSCRIPTION_ID}"

This will output your appId, password, name, and tenant. The name or appId may be used for servicePrincipalProfile.servicePrincipalClientID, and the password is used for servicePrincipalProfile.servicePrincipalClientSecret.
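Copying these values by hand is error-prone. Below is a minimal Python 3 sketch that maps the JSON printed by `az ad sp create-for-rbac` onto the servicePrincipalProfile keys named in this post. The key names follow the text; other acs-engine API versions may spell them differently (for example `clientId` and `secret`), so check the examples/kubernetes.json shipped with your acs-engine checkout.

```python
import json


def service_principal_profile(create_for_rbac_output: str) -> dict:
    """Map `az ad sp create-for-rbac` JSON output to servicePrincipalProfile.

    Key names are taken from this post; verify them against the example
    API model of your acs-engine version.
    """
    sp = json.loads(create_for_rbac_output)
    return {
        # appId (or name) identifies the service principal ...
        "servicePrincipalClientID": sp["appId"],
        # ... and password is its client secret.
        "servicePrincipalClientSecret": sp["password"],
    }
```

The command's output can be saved to a file and its contents passed in; since it contains the password, keep that file out of version control.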

Confirm your service principal by opening a new shell and running the following commands, substituting in your name, password, and tenant:

$ az login --service-principal -u NAME -p PASSWORD --tenant TENANT
$ az vm list-sizes --location westus

(Note: replace westus with your resource group's location.)
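The `az vm list-sizes` call also helps when picking a vmSize for the cluster. The following is a minimal Python 3 sketch, assuming a logged-in Azure CLI and its JSON output, whose entries carry `name`, `numberOfCores` and `memoryInMb` fields; the thresholds and helper names are illustrative.

```python
import json
import subprocess


def list_vm_sizes(location: str) -> list:
    """Run `az vm list-sizes` for a location and return the parsed JSON."""
    result = subprocess.run(
        ["az", "vm", "list-sizes", "--location", location, "--output", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def sizes_with_at_least(sizes: list, min_cores: int, min_memory_mb: int) -> list:
    """Names of the sizes meeting both thresholds, sorted alphabetically."""
    return sorted(
        s["name"]
        for s in sizes
        if s["numberOfCores"] >= min_cores and s["memoryInMb"] >= min_memory_mb
    )
```

For example, `sizes_with_at_least(list_vm_sizes("westus"), 2, 7000)` lists the sizes in westus with at least 2 cores and roughly 7 GB of memory.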

- Create a new kubernetes.json file in the examples directory at the following path:

$GOPATH/src/github.com/Azure/acs-engine/examples

Add the following parameters:

- The SSH public key, as the value of “keyData”. This can be obtained from:

$ cat ~/.ssh/id_rsa.pub

- Add values for the keys “servicePrincipalClientID” and for “servicePrincipalClientSecret”.

- Add a value for the “dnsPrefix” key under the master profile.

- Add private IPs for the master nodes and the agent nodes under the master profile and the agent profile, respectively.

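These parameters can also be filled in programmatically. The following minimal Python 3 sketch builds a kubernetes.json API model. The overall layout (apiVersion "vlabs", masterProfile, agentPoolProfiles, linuxProfile, servicePrincipalProfile) is an assumption modeled on the acs-engine examples of this period, the servicePrincipalProfile key names follow this post, and the pool name, counts and VM size are placeholders. The private IP settings are left out because their key names depend on your network setup and acs-engine version.

```python
import json
from pathlib import Path


def build_api_model(ssh_public_key: str, client_id: str, client_secret: str,
                    dns_prefix: str, agent_count: int = 3,
                    vm_size: str = "Standard_D2_v2") -> dict:
    """Assemble a kubernetes.json API model (layout assumed, see above)."""
    return {
        "apiVersion": "vlabs",
        "properties": {
            "orchestratorProfile": {"orchestratorType": "Kubernetes"},
            "masterProfile": {"count": 1, "dnsPrefix": dns_prefix, "vmSize": vm_size},
            "agentPoolProfiles": [{
                "name": "agentpool1",  # placeholder pool name
                "count": agent_count,
                "vmSize": vm_size,
                "availabilityProfile": "AvailabilitySet",
            }],
            "linuxProfile": {
                "adminUsername": "azureuser",  # matches the SSH user used later
                "ssh": {"publicKeys": [{"keyData": ssh_public_key.strip()}]},
            },
            "servicePrincipalProfile": {
                "servicePrincipalClientID": client_id,
                "servicePrincipalClientSecret": client_secret,
            },
        },
    }


def write_api_model(path: Path, model: dict) -> None:
    """Write the model as indented JSON, e.g. to examples/kubernetes.json."""
    path.write_text(json.dumps(model, indent=2) + "\n")
```

For example, read the key with `(Path.home() / ".ssh" / "id_rsa.pub").read_text()` and pass it as `ssh_public_key`.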

- Generate the new template deployment

Run:

$ ./bin/acs-engine generate examples/kubernetes.json

This generates the templates in the _output/Kubernetes-UNIQUEID directory, where UNIQUEID is a hash of your master's FQDN prefix.
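A minimal Python 3 sketch of the hand-off from generation to deployment: it finds the newest _output/Kubernetes-* directory, checks that azuredeploy.json and azuredeploy.parameters.json are present, and assembles Azure CLI 2.0 commands that create a resource group and deploy the templates into it. The resource group, location and deployment names are placeholders, and newer Azure CLI releases replace `az group deployment create` with `az deployment group create`.

```python
from pathlib import Path


def find_output_dir(acs_engine_root: Path) -> Path:
    """Return the most recently modified _output/Kubernetes-* directory."""
    matches = [p for p in (acs_engine_root / "_output").glob("Kubernetes-*") if p.is_dir()]
    if not matches:
        raise FileNotFoundError("no _output/Kubernetes-* directory; run acs-engine generate first")
    return max(matches, key=lambda p: p.stat().st_mtime)


def deployment_commands(output_dir: Path, resource_group: str, location: str,
                        deployment_name: str = "k8s-deployment") -> list:
    """Build (but do not run) the az commands that deploy the templates."""
    template = output_dir / "azuredeploy.json"
    parameters = output_dir / "azuredeploy.parameters.json"
    for f in (template, parameters):
        if not f.is_file():
            raise FileNotFoundError(f)
    return [
        ["az", "group", "create", "--name", resource_group, "--location", location],
        ["az", "group", "deployment", "create",
         "--name", deployment_name,
         "--resource-group", resource_group,
         "--template-file", str(template),
         "--parameters", "@" + str(parameters)],
    ]
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` once you are logged in with `az login`.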

- Deploying with Azure CLI 2.0

The templates generated in the previous step, azuredeploy.json and azuredeploy.parameters.json in the _output/Kubernetes-UNIQUEID directory, can now be used with the Azure CLI to deploy the cluster.

- Verification

To verify your deployment, SSH to the k8s master (use its public IP address or DNS name):

$ ssh azureuser@<master-public-ip-or-dns-name>

Then execute the following commands and check the output:

$ kubectl get nodes   # list all nodes in the k8s cluster
$ kubectl get services --all-namespaces
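Reading the node list by eye works for a handful of nodes; for larger clusters a small check helps. The following is a minimal Python 3 sketch that parses the default table printed by `kubectl get nodes`, locating the STATUS column from the header (so it also copes with kubectl versions that add a ROLES column), and returns the nodes that are not Ready. It assumes it runs wherever kubectl is configured for the cluster, for example on the master; the helper names are hypothetical.

```python
import subprocess


def not_ready_nodes(kubectl_output: str) -> list:
    """Names of nodes whose STATUS does not include Ready."""
    lines = [line for line in kubectl_output.splitlines() if line.strip()]
    if not lines:
        return []
    status_col = lines[0].split().index("STATUS")
    not_ready = []
    for line in lines[1:]:
        fields = line.split()
        # A status such as "Ready,SchedulingDisabled" still counts as Ready.
        if "Ready" not in fields[status_col].split(","):
            not_ready.append(fields[0])
    return not_ready


def check_cluster() -> list:
    """Run `kubectl get nodes` and return the names of non-Ready nodes."""
    result = subprocess.run(["kubectl", "get", "nodes"], check=True,
                            capture_output=True, text=True)
    return not_ready_nodes(result.stdout)
```

An empty list from `check_cluster()` means every node reported Ready.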
